- This post is on what I consider to be the most pressing problem in the world today.

- I lay out the theory underpinning information pollution, describe the significance and trajectory of the problem, and propose solutions (see bullets at the end).

- I encourage you to persist in reading this post, so that we can all continue this important conversation.

Introduction

Technological innovation and growth of a certain kind are good, but I want to explain why risk mitigation should be more of a priority for the world in 2018. Without appropriate risk mitigation, we could upend the cultural, economic and moral progress we have enjoyed over the last half-century and miss out on future benefits.

One particular risk looms large: we must urgently address the threat of information pollution and an ‘infopocalypse’. Cleaning up the information environment will require substantial resources, just like mitigation of climate change. The threat of an information catastrophe is more pressing than climate change and has the potential to subvert our response to climate change (and other catastrophic threats).

In a previous post, I responded to the AI Forum NZ’s 2018 research report (see ‘The Good the Bad and the Ugly’). I mentioned Eliezer Yudkowsky’s notion of an AI fire alarm. Yudkowsky was writing about artificial general intelligence; however, it’s now apparent that even with our present rudimentary digital technologies the risks are upon us. ‘A reality-distorting information apocalypse is not only plausible, but close at hand’ (Warzel 2018). The fire alarm is already ringing…

Technology is generally good

Technology has been, on average, very good for humanity. There is almost no doubt that people alive today have lives better than they otherwise would have because of technology. With few exceptions, perhaps including mustard gas, spam and phishing scams, arguably nuclear weapons, and other similar examples, technology has improved our lives.

We live longer healthier lives, are able to communicate with distant friends more easily, and travel to places or consume art we otherwise could not have, all because of technology.

Technological advance has a very good track record, and ought to be encouraged. Economic growth has in part driven this technological progress, and economic growth facilitates improvements in wellbeing by proxy, through technology.

Again, there are important exceptions, for example where there is growth of harmful industries that cause damage through externalities such as pollution, or through products that make lives worse, such as tobacco or certain uses of thalidomide.

The twentieth century, however, with its rapid growth, technological advance, relative peace, and moral progress, was probably the greatest period of advance in human wellbeing the world has experienced.

Responsible, sustainable growth is good

The key is to develop technology, whilst avoiding technologies that make lives worse, and to grow while avoiding threats to sustainability and harm to individuals.

Ideally the system should be stable, because the impacts of technology and growth compound and accumulate. If instability causes interruption to the processes, then future value is forgone, and the area under the future wellbeing curve is less than it otherwise would have been.

Economist Tyler Cowen explains this at length in his book Stubborn Attachments. Just as opening a superannuation account too late in life can forgo a substantial proportion of potential future wealth, delayed technological development and growth can forgo substantial wellbeing improvements for future people.
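The compounding argument can be made concrete with a small sketch. The 5% annual growth rate, 40-year horizon and 10-year delay below are illustrative assumptions only (they are not figures from Cowen’s book), and the function names are hypothetical.

```python
def compounded_value(initial: float, annual_rate: float, years: int) -> float:
    """Value of a lump sum after compounding annually for `years` years."""
    return initial * (1 + annual_rate) ** years


def fraction_forgone(annual_rate: float, horizon_years: int, delay_years: int) -> float:
    """Share of the final value lost by starting `delay_years` late."""
    on_time = compounded_value(1.0, annual_rate, horizon_years)
    delayed = compounded_value(1.0, annual_rate, horizon_years - delay_years)
    return 1 - delayed / on_time


# Hypothetical numbers chosen only for illustration.
loss = fraction_forgone(annual_rate=0.05, horizon_years=40, delay_years=10)
print(f"Share of final value forgone by a 10-year delay: {loss:.1%}")
```

Under these assumed numbers a ten-year delay forgoes a little under 39% of the final value. In this simple model the share lost depends only on the growth rate and the length of the delay, not on how long the horizon is.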

Imagine if the Dark Ages had lasted an extra 50 years: we would presently be without the internet, mobile phones, coronary angiography and affordable air travel.

To reiterate, stability of the system underpins the magnitude of future benefit. There are however a number of threats to the stability of the system. These include existential threats (which would eliminate the system) and catastrophic risks (which would set the system back, and so irrevocably forgo future value creation).

Risk mitigation is essential

The existential threats include (but are not limited to): nuclear war (if more than a few hundred warheads are detonated), asteroid strikes, runaway climate change (the hothouse earth scenario), systematic extermination by autonomous weapons run amok, an engineered bioweapon multistrain pandemic, geoengineering experiment gone wrong, and assorted other significant threats.

The merely catastrophic risks include: climate change short of hothouse earth, war or terrorism short of a few hundred nuclear warhead detonations, massive civil unrest, pandemic influenza, system collapse due to digital terror or malfunction, and so on.

There is general consensus that the threat of catastrophic risk is growing, largely because of technological advance (greenhouse gases, CRISPR, improvements in warhead power-to-size ratios, dependence on just-in-time logistics…). Even a 0.1% risk per year for each of ten independent catastrophic threats gives roughly a 63% chance that at least one of them occurs within a century. We need to make sure the system we are growing is not only robust against risk, but is antifragile, and grows to strengthen in response to less severe perturbations.

Currently it does not.
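The cumulative-risk figure above (0.1% per year, ten threats) can be checked with a few lines of arithmetic. This is a minimal sketch that assumes the threats are independent and that the annual risk stays constant; both are simplifying assumptions, and the function name is hypothetical.

```python
def prob_at_least_one(annual_risk: float, n_threats: int, years: int) -> float:
    """Chance that at least one of n independent threats occurs within `years`."""
    # Probability that none of the threats occurs in any of the years.
    prob_none = (1 - annual_risk) ** (n_threats * years)
    return 1 - prob_none


# Over a full 100 years, and over the 82 years from 2018 to 2100.
p_century = prob_at_least_one(annual_risk=0.001, n_threats=10, years=100)
p_remaining = prob_at_least_one(annual_risk=0.001, n_threats=10, years=82)

print(f"At least one catastrophe in 100 years: {p_century:.0%}")
print(f"At least one catastrophe in 82 years:  {p_remaining:.0%}")
```

On these assumptions the chance is roughly 63% over a full century and roughly 56% over the remainder of this one: more likely than not, though short of certainty. Higher annual risks or more threats push the figure up quickly.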

Although we want to direct many of our resources toward technological growth and development, we also need to invest a substantial portion in ensuring that we do not suffer major setbacks as a result of these foreseeable risks.

We need equal measures of excited innovation and cautious pragmatic patience. We need to get things right, because future value (and even the existence of a future) depends on it.

We must rationally focus and prioritize

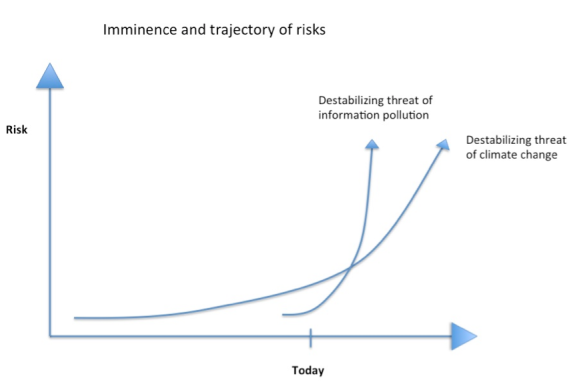

There is a range of threats and risks to individuals and society. Large threats and risks can emerge at different times and grow at different rates. Our response to threats and risks needs to be prioritized by the imminence of the threat, and the magnitude of its effects.

It is a constant battle to ensure adequate housing, welfare, healthcare, and education. But these problems, though somewhat important (pressing and a little impactful), and deserving of a decent amount of focus, are relatively trivial compared with the large risks to society and wellbeing.

Climate change is moderately imminent (significant temperature rises over the next decades) and moderately impactful (it will cause severe disruption and loss of life, but it is unlikely to wipe us out). A major asteroid strike is not imminent (assuming we are tracking most of the massive near earth objects), but could be hugely impactful (causing human extinction).

The Infopocalypse

I argue here that the risks associated with emerging information technologies are seriously imminent, and moderately impactful. This means that we ought to deal with them as a higher priority and with at least as much effort as our (woefully inadequate) efforts to mitigate climate change.

To be clear, climate change absolutely must be dealt with in order to maximize future value, and the latest IPCC report is terrifying. If we do not address it with sufficiently radical measures then the ensuing drought, extreme weather, sea level rises, famine, migration, civil unrest, disease, and so on, will undermine the rate of technological development and growth, and we will forgo future value as a result. But the same argument applies to the issue of information pollution. First I will explain some background.

Human information sharing and cognitive bias

Humanity has shown great progress and innovation in storing and packaging information. Since the first cave artist scratched an image on the wall of a cave, we have continued to develop communication and information technologies with greater and greater power. Written symbols solved the problem of accounting in complex agricultural communities, the printing press enabled the dissemination of information; radio, television, the internet, and mobile phones have all provided useful and life enhancing tools.

Humans are a cultural species. This means that we share information and learn things from each other. We also evolve beneficial institutions. Our beliefs, habits and formal routines are selected and honed because they are successful. But the quirks of evolution mean that it is not only ideas and institutions that are good for humanity that arise. We have a tendency for SNAFUs.

We employ a range of different strategies for obtaining relevant and useful information. We can learn information ourselves through a trial and error process, or we can learn it from other people.

Generally, information passed from one generation to the next, parent to child (vertical transmission), is likely to be adaptive information that is useful for navigating the problems the world poses. This is because natural selection has instilled in parents a psychological interest in preparing their children to survive and these same parents, holders of the information, are indeed alive.

Information that we glean from other sources such as our contemporaries (horizontal transmission) does not necessarily track relevant or real problems in the environment, nor necessarily provide us with useful ways to solve these problems. Think of the used car salesperson explaining to you that you really do need that all-leather interior. Think of Trump telling welfare beneficiaries that they’ll be better off without Medicare.

Furthermore, we cannot attend to all the information all the time, and we cannot verify all the information all the time. So we use evolved short cuts, useful heuristics that have obtained for us, throughout history and over evolutionary time, the most useful information there is. Such simple psychological rules as ‘copy talented and prestigious people’, or ‘do what everyone else is doing’, have generally served us well.

Until now…

There are many other nuances to this system of information transmission, such as the role of ‘oblique transmission’ (e.g. from teachers), the role of group selection for fitness rather than individual selection, and the role of the many other cognitive biases besides the prestige-biased and frequency-dependent copying just mentioned. There is also the appeal of the content of the information itself: does it seem plausible, does it fit with what is already believed, does it have a highly emotive aspect, and is it simple to remember?

The key point is that the large-scale dynamics of information transmission depend on these micro processes of content, source, and frequency assessment (among other processes) at the level of the individual.

All three of these key features can easily be manipulated, at scale, and with personalization, by existing and emerging information technologies.

Our usually well-functioning cognitive short-cuts can be hacked. The advertising and propaganda industries have realized this for a long time, but until now their methods were crude and blunt.

The necessity of true (environmentally tracking) information

An important feature of information transmission is that obtaining information imposes a cost on the individual. This cost can be significant due to the attention required, time and effort spent on trial and error, research, and so forth.

It is much cheaper to harvest information from others rather than obtain it yourself (think content producers vs content consumers). Individuals who merely harvest free information without aiding the production and verification of information are referred to in cultural and information evolution models as ‘freeriders’.

Freeriders do very well when environments are stable, and the information in the population tracks that environment, meaning that the information floating around is useful for succeeding in the environment.

However, when environments change, then strategies need to change. Existing information biases and learning strategies, favoured by evolution because, on average, they obtain good quality information, may no longer track the relevant features of the environment. These existing cognitive tools may no longer get us, on average, good information.

Continuing to use existing methods to obtain or verify information when the game has changed can lead individuals and groups to poor outcomes.

We are seeing this in the world today.

The environment for humanity has been changing rapidly and we now inhabit a world of social media platform communication, connectivity, and techniques for content production, which we are not used to as a species. Our cognitive biases, which guide us to trust particular kinds of information, are not always well suited to this new environment, and our education systems are not imbuing our children with the right tools for understanding this novel system.

As such, the information we share is no longer tracking the problem space that it is meant to help us solve.

This is particularly problematic where ‘consume only (and do not verify)’ freeriders are rife, because then those that create content have disproportionate power. Those who create content with malice (defectors) have the upper hand.

The greater the gap between the content in the messages and the survival and wellbeing needs of the content consumers, the greater the risk of large scale harm and suffering across time.

If we don’t have true information, we die.

Maybe not today, maybe not tomorrow, but probabilistically and eventually.

Fundamentally, that is what brains are for: tracking true features of the environment and responding to them in adaptive ways. The whole setup collapses if the input information is systematically false or deceptive and our evolved cognitive biases persist in believing it. The potential problem is immense. (For a fuller discussion see Chapter 2 of my Master’s thesis, listed under further reading.)

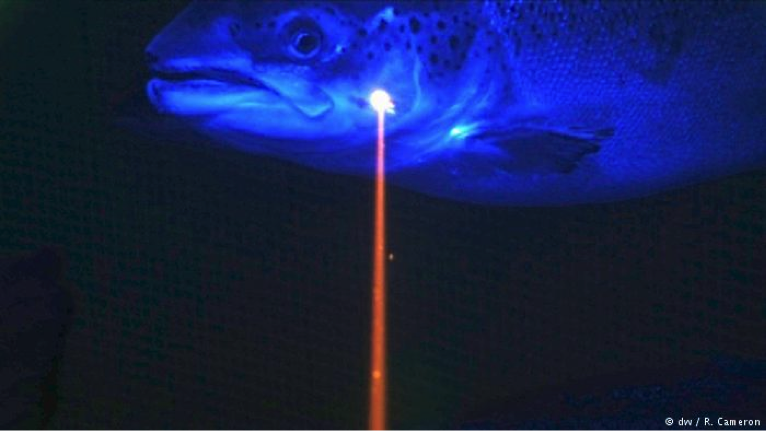

How information technology is a threat: laser phishing and reality apathy

Information has appeal due to its source, frequency or content. So how can current and emerging technological advances concoct a recipe for disaster?

We’ve already seen simple hacks and unsophisticated weaponizing of social media almost certainly influence global events for the worse, such as the US presidential election (Cambridge Analytica), the Brexit vote, the Rohingya genocide, suppression of women’s opinions in the Middle East, and many others. The Oxford Computational Propaganda Project catalogues these.

These simple hacks involve the use of human trolls, and some intelligent systems for information creation, testing and distribution. Common techniques involve bot armies to convey the illusion of frequency, thousands of versions of advertisements with reaction tracking to fine tune content, and the spread of fake news by ‘prestigious’ individuals and organizations. All these methods can be used to manipulate the way information flows through a population.

But this is just the tip of the iceberg.

In the above cases a careful user can remain skeptical of much of the information presented by comparing the messages received to the reality at large (though this involves effort). However, we are rapidly entering an era where reality at large will be manipulated.

Technology presently exists that can produce authentic sounding human audio, manipulate video seamlessly, remove or add individuals to images and video, create authentic looking video of individuals apparently saying things they did not say, and a wide range of other malicious manipulations.

A mainstay of these techniques is the use of generative adversarial networks (GANs), a machine learning technique in which one network (the generator) produces new content while a second network (the discriminator) tries to tell that content apart from real training examples. The two are trained against each other until the generated content is hard to distinguish from the training dataset, and yet did not exist in the training dataset. Insofar as we believe video, audio and images document reality, GANs are starting to create reality.

Targeting this new ‘reality’ in the right ways can exploit the psychological biases that we depend on in order to attend to what ought to be the most relevant and important information amid a sea of content.

We are all used to being phished these days. This is where an attempt is made to deceive us into acting in the interests of a hostile entity through the use of (often) an email or social media message. Phishing is often an attempt to obtain personal information, but can manifest as efforts to convince us to purchase products that are not in our interests.

The ‘Nigerian scams’ were some of the first such phishes, but techniques have advanced well beyond ‘Dear esteemed Sir or Madam…’

Convincing us of an alternate reality is the ultimate phish.

Laser phishing is the situation where the target is phished but the phish appears to be an authentic communication from a trusted source. Perhaps your ‘best friend’ messages you on social media, or your ‘boss’ instructs you to do something.

The message reads exactly like the genuine article, complete with the tone, colloquialisms and typical misspellings you’re used to the individual making. This is because machine learning techniques have profiled the ‘source’ of the phish and present an authentic-seeming message. If this technique becomes advanced, scaled endlessly through automation, and frequently deployed, it will be necessary, but simply impossible, to verify the authenticity of every message and communication.

The mere existence of the technique (and others like it) will cast doubt on every piece of information you encounter every day.

But it gets worse.

Since GANs are starting to create authentic seeming video, we can imagine horror scenarios involving video of Putin or Trump or Kim Jong Un declaring war. I won’t dwell too much on these issues here, as I’ve previously posted on trust and authenticity, technology and society, freedom and AI, AI and human rights. Needless to say, things are getting much worse.

Part of the problem lies in the incentives that platform companies have for sustaining user engagement. We know that fake news spreads more widely than truth online. This tends to lead to promotion of sensationalist content and leaves the door wide open for malicious agents to leverage an attentive and psychologically profiled audience. The really big threat is when intelligent targeting (the automated laser phishing above) is combined with dangerous fake content.

These techniques (and many others) have not been widely deployed yet, and by ‘widely’ I mean that most of the digital content we encounter is not yet manipulated. But the productive potential of digital methods and the depth of insight about targets gleaned from shared user data is not bound by human limits.

We depend on the internet and digital content for almost everything we do or believe. Very soon more content will be fake than real. That is not an exaggeration. I’ll say it again: very soon more content will be fake than real. What will we make of that? Without true information we cannot track the world, and we cannot progress.

A real possibility is that we come to question everything, even the true and useful things, and become apathetic toward reality by default.

That is the infopocalypse.

Tracking problems in our environment

It is critical for our long-term survival, success and wellbeing, that the information we obtain tracks the true challenges in our environment. If there is a mismatch between what we think is true and what really is true, then we will suffer as individuals and a species (think climate denial vs actual rising temperatures).

If we believe that certain kinds of beliefs and institutions are in our best long-term interests when they are not, then we are screwed.

Bad information could lead to maladaptation and potentially to extinction. This is especially true if the processes that are leading us to believe the maladaptive information are impervious to change. There are a number of reasons why this might be so. The processes might be leveraging our cognitive biases, or they may be sustained by powerful automated entities or they may quell our desire for change through apparent reward.

There are many imaginable scenarios where the information we consume is almost all no good for us. It is ‘non-fitness tracking’, yet we lap it up anyway.

We’re seeing this endemically in the US at the moment. The very individuals who stand to lose the most from Trump’s health reform are the most vocal supporters of his policies. The very individuals who stand to gain most from a progressive agenda are sending pipe bombs in the mail.

Civil disorder and outright conflict are only (excuse the pun) a stone’s throw away.

This is the result of all the dynamics I’ve outlined above. Hard won rights, social progress, and stability are being eroded, and that will mean we forgo future value because if the world taught us anything in the 20th Century it’s that…

… peace is profitable.

If we can’t shake ourselves out of the trajectory we are on, then the trajectory is ‘evolutionarily stable’, to use the term Dawkins popularized in 1976. And to quote, ‘an evolutionarily stable strategy that leads to extinction… leads to extinction’.

This is not hyperbole because, as noted above, hothouse earth is an extinction possibility and nuclear war is an extinction possibility. If the rhetoric and emerging information manipulation techniques take us down one of these paths then that is our fate.

To reiterate, the threat of an infopocalypse is more pressing and more imminent than the threat of climate change, and we must address it immediately, with substantial resource investment, otherwise malicious content-creating defectors will win.

As we advance technologically, we need to show restraint and patience and mitigate risks. This means a little research, policy and action, taken thoughtfully, rather than rushing to the precipice.

The battle against system perturbation and risk is an ongoing one, and many of the existing risks have not yet been satisfactorily mitigated. Nuclear war is a standout contender for greatest as yet unmitigated threat (see my previous post on how we can keep nuclear weapons but eliminate the existential threat).

Ultimately, a measured approach will result in the greatest area under the future value curve.

So what should we do?

I feel like all the existing theory that I have outlined and linked to above is even more relevant today than when it was first published. I also feel like there are not enough people with broad generalist knowledge in these domains to see the big picture here. The threats are imminent, they are significant, and yet with few exceptions, they remain unseen.

We have the evolution theory, information dynamic theory, cognitive bias theory, and machine learning theory to understand and address these issues right now. But that fight needs resourcing and it needs to be communicated so a wider population understands the risks.

Solutions to this crisis, just like solutions to climate change, will be multifaceted.

- In the first instance we need more awareness that there is a problem. This will involve informing the public, technical debate, writing up horizon scans, and teaching in schools.

- Children need to grow up with information literacy. I don’t mean just how to interpret a media text, or how to create digital content. I mean they need to learn how to distinguish real from fake, and how information spreads due to the system of psychological heuristics, network structure, frequency and source biases, and the content appeal of certain kinds of information. These are critical skills in a complex information environment and we have not yet evolved defenses against the current threats.

- We need to harness metadata and network patterns to automatically watermark content and develop a ‘healthy content’ labelling system akin to healthy food labels, to inform consumers of how and why pieces of information have spread. We need to teach this labelling system widely. We need to fix fake news. (I’ve recently submitted a proposal to a philanthropically funded competition for research funding to contribute to exactly that project. And I have more ideas if others out there can help fund the research.)

- We need mechanisms, such as blockchain identity verification, to subvert laser phishing.

- We need to outlaw the impersonation of humans in text, image, audio or video.

- We need to be vigilant to the technology of deception.

- We need to consider the sources of our information and fight back with facts.

- We need to reject the information-polluting politics of populism.

- We need to invest in cryptographic verification of images and audio.

- We need to respect human rights whenever we deploy digital content.

- We also need a local NZ summit on information pollution and reality apathy.
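Several of the proposals above (watermarking, cryptographic verification of images and audio) rest on the same basic idea: a publisher attaches a tag that only they could have produced, and a consumer checks it. The following is a minimal sketch of that idea using Python’s standard `hmac` and `hashlib` modules. The shared secret key, the sample bytes and the function names are assumptions made for illustration; a real deployment would more likely use public-key signatures so that anyone can verify content without holding the secret.

```python
import hashlib
import hmac


def sign_content(content: bytes, secret_key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag binding the content to the key holder."""
    return hmac.new(secret_key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, tag: str, secret_key: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    expected = sign_content(content, secret_key)
    return hmac.compare_digest(expected, tag)


# Hypothetical key and content, for illustration only.
key = b"hypothetical-publisher-key"
original = b"raw bytes of an image or audio clip"
tag = sign_content(original, key)

print(verify_content(original, tag, key))         # True: content is untouched
print(verify_content(original + b"!", tag, key))  # False: content was altered
```

A scheme like this only shows that content is unchanged since it was tagged by a key holder. It says nothing about whether the content was truthful in the first place, which is why the labelling and education proposals are still needed alongside it.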

Summary

More needs to be done to ensure that activity at a local and global level is targeted rationally towards the most important issues and the most destabilizing risks. This means a rational calculus of the likely impact of various threats to society and the resources required for mitigation.

Looking at the kinds of issues that today ‘make the front page’ shows that this is clearly not happening at present (‘the Koru Club was full’ – I mean seriously!). And ironically the reasons for this are the very dynamics and processes of information appeal, dissemination and uptake that I’ve outlined above.

A significant amount is known about cultural informational ‘microevolutionary’ processes (both psychological and network-mediated) and it’s time we put this theory to work to solve our looming infopocalypse.

I am more than happy to speak pro bono or as a guest lecturer on these issues of catastrophic risk, the threat of digital content, or information evolution and cognitive biases.

If any organizations, think tanks, policy centers, or businesses wish to know more then please get in touch.

I am working on academic papers about digital content threat, catastrophic risk mitigation, and so on. However, the information threat is emerging faster than it can be published on.

Please fill out my contact form to get in touch.

Selected Further Reading:

Author’s related shorter blogs:

The problem of Trust and Authenticity

Accessible journalism:

Helbing (2017) Will democracy survive big data and artificial intelligence

Warzel (2018) Fake news and an information apocalypse

Academic research:

Mesoudi (2017) Prospects for a science of cultural evolution

Creanza (2017) How culture evolves and why it matters

Acerbi (2016) A cultural evolution approach to digital media

Author’s article on AI Policy (2017) Rapid developments in AI

Author’s article on memetics (2008) The case for memes

Author’s MA thesis (2008) on Human Culture and Cognition

As this is paid research can you please have open disclosure on who your client is for each of your research postings.

Much of your research is biased and the reader should know who has paid for it.

Hi ‘Joe’ thanks for the comment. As the first post in this blog stated it is an ideas blog, I post my ideas here (see: https://adaptresearchwriting.com/2017/08/01/ideas-blog/ ). I started this post stating ‘I’ consider this problem important, and I cited ‘my’ Masters thesis. There is no funding support for this post. It’s an opinion piece and clearly marked as such in the first sentence. When my posts are summaries of published peer reviewed research that I have conducted I link to the publication, and you will find funding information there if there was any funder. Usually there is not. I am an independent academic and the research is my own. I do conduct paid work for clients (as listed on my home page) but I do not usually blog about that work, as it is for the client. It would be great if you could identify the areas you think are biased, and I can tell you whether that is opinion or based on research. It would be great to know who you are too, so I can estimate your own biases.

Thanks for the reply.

The whole flavour of your site is that it is a company that carries out research for paid clients.

This goes through all your pages on the site, and you list a number of Universities that give it the flavour of scientific credibility.

My company builds online sites for our clients, many of whom are large corporations.

One of our clients came across your article and contacted me in a bit of a panic… we are still at the early stages of building their new online presence in Web3.0 and they are excited but nervous about it as there is a considerable cost involved.

I went to your site and had a good look around it, and it does come across as a bona fide scientific research service with some very credible affiliate organisations listed.

So… I followed the normal practice of “follow the money”… and found I could not find any disclosure on that.

This goes against a whole bunch of ethical practice in the scientific publishing field.

So yes… you can probably talk your way out of it on a technical point, but it would really be better for you to have two quite separate sites, one dealing with paid research and one dealing with opinion blogs… as this one is confusing as to whether the reader is reading bona fide research or an opinion blog

I’m having to now do some firefighting with our client which I’m not pleased about as they are a client with a history with us and now want to put good funding into moving on to a new Web3.0 presence.
